What Makes a Large-Scale Data Collection Methodology for Web Scraping Deliver Up to 65% Faster Results?

Nov 21

Introduction

Modern digital ecosystems demand speed, accuracy, and consistency, especially when organizations rely on thousands of daily data points for competitive decision-making. Businesses now operate in an environment where reliable information must be acquired at scale and processed into actionable insights at exceptional speed. Here, a Large-Scale Data Collection Methodology for Web Scraping creates a unified framework that supports rapid, stable, and scalable extraction processes without overwhelming internal infrastructures.

This shift toward automation has increased reliance on sophisticated workflows that support parallel data pipelines, continuous collection, and adaptive extraction layers designed to handle complex website architectures. Moreover, industries tapping into Review Scraping Services and broader data-driven intelligence ecosystems increasingly prioritize methodologies that guarantee reduced latency and predictable uptime.

Companies adopting scalable extraction mechanisms report up to 65% faster data delivery cycles compared to traditional scraping approaches. This performance boost not only improves competitive agility but also helps teams execute analytics workflows with confidence. As a result, businesses using high-capacity scraping frameworks experience measurable improvements in forecasting, demand tracking, and operational planning.

Methods That Improve Reliability in High-Volume Pipelines

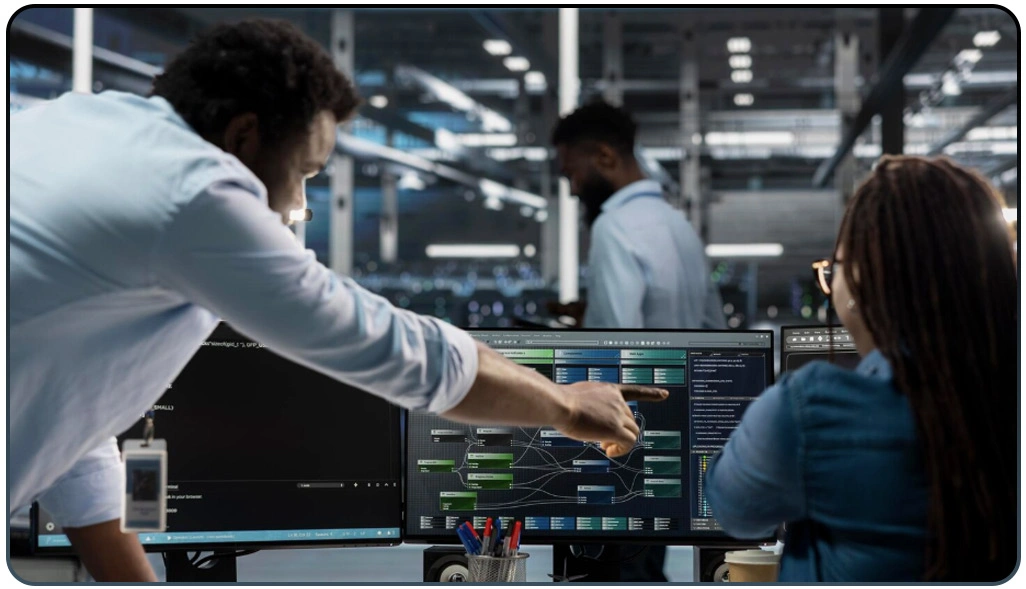

High-volume extraction environments depend on structured workflows that ensure stability, responsiveness, and consistency across diverse web sources. Effective systems prioritize multi-threaded processes, dynamic queue management, and fault-tolerant logic to maintain performance even when websites change layout or structure. The integration of Automated Large-Scale Data Extraction contributes to smoother operations by minimizing delays and distributing tasks efficiently.
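The combination of multi-threaded processing, a task queue, and fault-tolerant logic described above can be sketched roughly as follows. This is a minimal illustration, not a production crawler: `fetch` is a hypothetical callable supplied by the caller (for example, a wrapper around an HTTP client), and the names `fetch_with_retry` and `crawl`, along with the retry and backoff parameters, are assumptions made for this example.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_with_retry(fetch, url, retries=3, base_delay=0.0):
    """Call fetch(url), retrying with exponential backoff on any exception."""
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception:
            if attempt == retries - 1:
                raise  # out of attempts: let the caller record the failure
            time.sleep(base_delay * (2 ** attempt))


def crawl(urls, fetch, workers=4, retries=3, base_delay=0.0):
    """Fetch every URL on a thread pool and return (results, failures)."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # The executor's internal queue hands tasks to idle worker threads.
        futures = {
            pool.submit(fetch_with_retry, fetch, url, retries, base_delay): url
            for url in urls
        }
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:
                # One failing source does not stop the rest of the batch.
                failures[url] = repr(exc)
    return results, failures
```

Keeping failures in a separate dictionary, rather than raising on the first error, is one way to let the rest of a batch finish while the failed URLs are re-queued or inspected later.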

As workflows scale, companies enhance predictability by implementing validation rules that reduce duplication, detect inconsistencies, and strengthen accuracy. Adaptive models powered by AI web scraping allow systems to recognize shifting HTML patterns, enabling quicker adjustment without manual intervention.
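As a rough illustration of such validation rules, the sketch below rejects records with missing fields or unparseable prices and drops duplicates by URL. The field names (`url`, `title`, `price`) and the function name `validate_records` are assumptions chosen for the example, not a fixed schema.

```python
def validate_records(records, required=("url", "title", "price")):
    """Split records into (clean, rejected), dropping duplicates by URL."""
    seen = set()
    clean, rejected = [], []
    for rec in records:
        # Rule 1: every required field must be present and non-empty.
        missing = [f for f in required if rec.get(f) in (None, "")]
        if missing:
            rejected.append((rec, "missing: " + ", ".join(missing)))
            continue
        # Rule 2: the price must parse as a non-negative number.
        try:
            price = float(rec["price"])
        except (TypeError, ValueError):
            rejected.append((rec, "invalid price"))
            continue
        if price < 0:
            rejected.append((rec, "negative price"))
            continue
        # Rule 3: one record per URL (trailing slash ignored, a simplification).
        key = str(rec["url"]).strip().rstrip("/")
        if key in seen:
            rejected.append((rec, "duplicate"))
            continue
        seen.add(key)
        clean.append({**rec, "price": price})
    return clean, rejected
```

Returning the rejected records with a reason, instead of silently discarding them, makes it easier to spot when a source starts producing inconsistent output.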

The adoption of a streamlined Web Scraping API also improves communication between internal systems and external data sources, allowing pipelines to refresh at higher speeds while maintaining clean output. With these mechanisms in place, organizations experience shorter turnaround times and more reliable datasets for analytical workflows, demand forecasting, and performance monitoring.

Comparative View of Extraction Workflow Efficiency:

| Parameter | Traditional Setup | Enhanced Pipeline |
|---|---|---|
| Processing Volume | Moderate | High |
| Latency | Higher | Lower |
| Data Consistency | Variable | Stable |
| Overall Success Rate | Limited | Improved |

These refinements result in more dependable intelligence streams, helping teams convert large volumes of online information into actionable insights.

Systems That Maintain Stability in Distributed Capture Operations

The integration of Live Crawler Services enhances real-time visibility by enabling continuous refresh cycles and ensuring that extracted data reflects the latest available information. These adaptive mechanisms are valuable for industries tracking rapid market movements, shifting prices, or frequent content updates. To reinforce structural integrity, teams incorporate Automated Data Scraping Tools that streamline repeated tasks and sustain long-duration sessions with minimal disruption.

Workflow enhancement also benefits from automated routing systems that shift tasks between nodes when performance fluctuations occur. Consistency further improves when pipelines adopt API Alternative Data Scraping techniques that reduce redundancy and maintain efficient transfer between systems.
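One simple way to picture automated routing between nodes is to track a moving average of each node's latency and send the next task to the fastest healthy node. The sketch below assumes this heuristic; the `Node` class, the `pick_node` function, and the `max_latency` threshold are hypothetical names introduced for illustration, and real schedulers typically weigh more signals than latency alone.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    avg_latency: float = 0.0  # exponential moving average, in seconds
    healthy: bool = True

    def record(self, latency, alpha=0.5):
        """Blend a new latency sample into the moving average."""
        self.avg_latency = alpha * latency + (1 - alpha) * self.avg_latency


def pick_node(nodes, max_latency=2.0):
    """Return the healthy node with the lowest average latency."""
    candidates = [
        n for n in nodes if n.healthy and n.avg_latency <= max_latency
    ]
    if not candidates:
        raise RuntimeError("no healthy node available")
    return min(candidates, key=lambda n: n.avg_latency)
```

Because a new node starts with an average of zero, it is preferred until it has reported a few samples; a real deployment might seed the average with a measured baseline instead.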

Performance Metrics for Distributed Environments:

| Metric | Non-Distributed Setup | Distributed System |
|---|---|---|
| Throughput | Limited | Higher |
| Downtime | Frequent | Minimal |
| Processing Stability | Variable | Reliable |
| Refresh Cycle | Slower | Faster |

With these improvements, distributed environments offer greater resilience, enhanced visibility, and predictable performance across large-scale online datasets.

Techniques That Increase Accuracy and Strengthen Data Transformation

Adaptive systems powered by AI Web Scraping Services help detect irregularities and respond to changes in web structures with higher precision. Machine-driven validation models pinpoint unusual patterns and optimize correction mechanisms before data flows into analytical tools. This ensures that teams maintain clarity and consistency even when dealing with complex layouts.
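As a much simpler stand-in for a trained validation model, the sketch below flags fields whose fill rate in a new batch drops well below an expected baseline, which is a common symptom of a changed page layout. The function names, the baseline values, and the `tolerance` threshold are assumptions for this example only.

```python
def field_fill_rates(records, fields):
    """Return the share of records in which each field is non-empty."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / total
        for f in fields
    }


def detect_layout_drift(records, baseline, tolerance=0.2):
    """List fields whose fill rate fell more than `tolerance` below baseline."""
    rates = field_fill_rates(records, baseline.keys())
    return sorted(
        field
        for field, expected in baseline.items()
        if expected - rates[field] > tolerance
    )
```

A flagged field can then pause the affected source or trigger a selector review before incomplete data reaches analytical tools.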

Transformation pipelines also gain efficiency through modular mapping strategies that convert raw attributes into standardized values. The inclusion of Advanced Enterprise Data Extraction helps streamline workflows and reduce manual intervention. Meanwhile, businesses relying on user feedback datasets benefit from Modern Review Data Extraction Services, which help organize sentiment-driven information for operational and strategic decision-making.
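A modular mapping strategy can be sketched as a table that pairs each raw attribute with a target field name and a small converter function. The source labels (`Product Name`, `Price`, `Stars`), the target names, and the parsers below are hypothetical and would differ per site.

```python
def parse_price(text):
    """Convert '$1,299.00' to 1299.0 (assumes a dot decimal separator)."""
    cleaned = "".join(ch for ch in str(text) if ch.isdigit() or ch == ".")
    return float(cleaned)


def parse_rating(text):
    """Convert '4.5 out of 5' to 4.5 by reading the leading number."""
    return float(str(text).split()[0])


# Each entry maps a raw attribute to (standard field name, converter).
FIELD_MAP = {
    "Product Name": ("name", str.strip),
    "Price": ("price", parse_price),
    "Stars": ("rating", parse_rating),
}


def transform(raw, field_map=FIELD_MAP):
    """Apply the mapping to one raw record, ignoring unmapped attributes."""
    out = {}
    for source, (target, convert) in field_map.items():
        if source in raw:
            out[target] = convert(raw[source])
    return out
```

Supporting a new source then means adding or swapping a mapping table rather than rewriting the transformation code itself.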

Quality Improvements After Optimization:

| Indicator | Before Optimization | After Optimization |
|---|---|---|
| Accuracy Level | Lower | Higher |
| Duplication | Higher | Reduced |
| Error Detection | Manual | Automated |
| Processing Speed | Slower | Faster |

These advancements give organizations the ability to build cleaner, more structured intelligence layers that support forecasting, competitive tracking, and in-depth data modeling.

How Can Web Data Crawler Help You?

Modern enterprises require dependable extraction systems capable of managing massive datasets at scale, and this is where a strategically designed framework becomes essential. By integrating a Large-Scale Data Collection Methodology for Web Scraping within our service architecture, we enable businesses to capture structured intelligence from complex online sources with speed, precision, and reliability.

What You Get with Our Expertise:

- Stable long-running extraction pipelines.

- Real-time monitoring and accuracy checkpoints.

- Secure architecture with privacy-safe protocols.

- Rapid deployment for new data requirements.

- Structured outputs aligned with enterprise needs.

- Smarter workflow orchestration for high-volume processing.

In the final stage, our team ensures seamless data delivery, optimized processing, and intelligent transformation supported by API Alternative Data Scraping, giving businesses a clear path to consistent and scalable insights.

Conclusion

Building a sustainable intelligence ecosystem requires accuracy, speed, and structured workflows that maintain consistency across massive data volumes. Companies adopting a deep analytical approach supported by a Large-Scale Data Collection Methodology for Web Scraping experience dramatically faster delivery cycles and reduced operational challenges.

By reinforcing your infrastructure with advanced methodologies and intelligently designed pipelines driven by Automated Data Scraping Tools, your organization can consistently accelerate performance and strengthen long-term intelligence capabilities. Connect with Web Data Crawler today to transform your large-scale data extraction strategy into a faster, smarter, and more reliable intelligence engine.