How to Extract an Indian Grocery Database via Pictures and UPC Codes with High Accuracy Across 50K+ Items?

Jan 09

Introduction

India's grocery ecosystem is vast, fragmented, and constantly evolving. From regional FMCG brands to hyperlocal private labels, retailers and researchers struggle to maintain a unified, accurate, and scalable product database. This is where image-based recognition and standardized UPC mapping become essential for building a dependable grocery intelligence foundation.

Modern data pipelines integrate image recognition, barcode validation, and pricing normalization to support catalog enrichment at scale. When implemented correctly, these systems reduce reliance on manual data entry and shorten time-to-insight for pricing teams, supply chain planners, and category managers. The growing adoption of Web Scraping Grocery Data techniques has further strengthened these processes by enabling continuous updates from multiple retail sources.

When aligned with visual data capture, scraping allows enterprises to track packaging changes, variant launches, and regional availability in near real time. This holistic approach makes it possible to Extract an Indian Grocery Database via Pictures and UPC Codes with accuracy levels exceeding 99% across tens of thousands of SKUs, forming a reliable backbone for analytics-driven retail strategies.

Resolving Product Identity Challenges Across Retail Channels

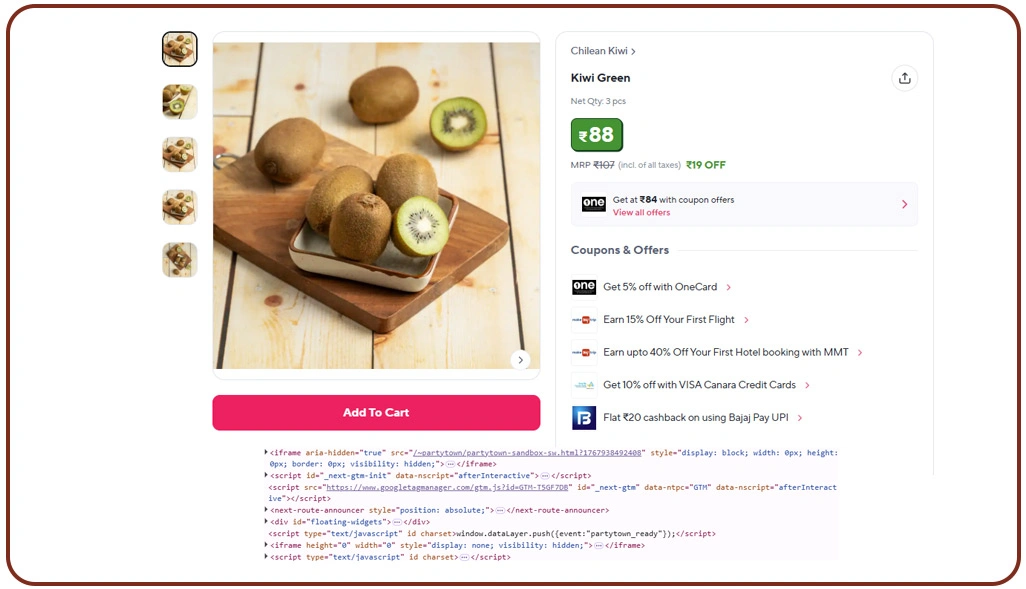

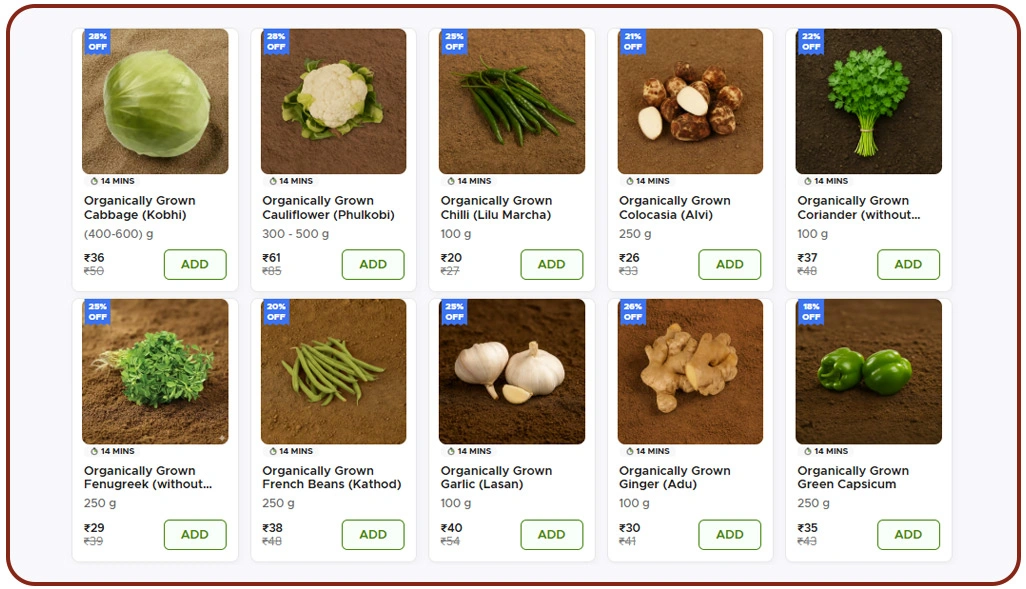

Indian grocery ecosystems suffer from inconsistent product identities due to regional naming variations, frequent packaging refreshes, and incomplete catalog standards. These inconsistencies create duplicate SKUs, mismatched prices, and unreliable analytics outputs. Businesses attempting to consolidate grocery intelligence often face serious data hygiene issues that affect forecasting accuracy and assortment planning.

Product images help match visual attributes such as label design, brand placement, and pack size, while barcode validation ensures manufacturer-level uniqueness. When both data points are validated together, ambiguity is significantly reduced. Research indicates that visual-code mapping improves SKU accuracy by over 35% compared to text-based matching models.
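
As a minimal sketch of validating both signals together, the Python below requires barcodes to match exactly and compares pack images with an average hash (aHash) and Hamming distance. aHash is a simple stand-in chosen for illustration, not necessarily the visual model a production pipeline uses. The function names and the 10-bit distance threshold are assumptions, and images are assumed to be already downscaled to an 8×8 grayscale grid (a library such as Pillow would normally handle that step).

```python
from typing import Sequence


def average_hash(gray_8x8: Sequence[Sequence[int]]) -> int:
    """64-bit average hash of an 8x8 grid of grayscale values (0-255)."""
    pixels = [p for row in gray_8x8 for p in row]
    if len(pixels) != 64:
        raise ValueError("expected an 8x8 grayscale grid")
    mean = sum(pixels) / 64
    bits = 0
    for p in pixels:
        # One bit per pixel: 1 if brighter than the image mean, else 0.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def is_same_product(barcode_a: str, hash_a: int,
                    barcode_b: str, hash_b: int,
                    max_distance: int = 10) -> bool:
    """Hypothetical rule: barcodes must match exactly AND the pack images must look alike."""
    if barcode_a != barcode_b:
        return False  # different manufacturer-level identity
    return hamming(hash_a, hash_b) <= max_distance


# Toy 8x8 "images": a gradient, a brighter copy of it, and an inverted copy.
gradient = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brighter = [[min(255, v + 20) for v in row] for row in gradient]
inverted = [[255 - v for v in row] for row in gradient]

code = "8901234567890"
print(is_same_product(code, average_hash(gradient), code, average_hash(brighter)))  # True
print(is_same_product(code, average_hash(gradient), code, average_hash(inverted)))  # False
```

Because the hash only records brightness relative to the mean, a uniformly brighter photo of the same pack still matches, while a visually different image under the same barcode is rejected and can be routed to review.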

This approach also complements Popular Grocery Data Scraping, enabling structured ingestion of new listings as they appear across online grocery platforms. Continuous ingestion ensures faster discovery of private labels, regional SKUs, and promotional variants. By validating identifiers at the point of ingestion, enterprises can prevent catalog inflation and maintain clean product hierarchies.
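
Validating identifiers at ingestion usually starts with the GS1 check digit. The sketch below applies the standard GS1 mod-10 rule to GTIN-8, UPC-A (GTIN-12), EAN-13 and GTIN-14 codes, then deduplicates on a zero-padded 14-digit key. Accepting EAN-13 matters in this market because codes issued through GS1 India begin with the prefix 890. The listing format, the function names and the choice of GTIN-14 as the catalog key are assumptions for illustration.

```python
from typing import Dict, Iterable, Optional, Tuple


def gtin_check_digit(body: str) -> int:
    """GS1 mod-10 check digit computed over the data digits (all but the last)."""
    total = 0
    # Weights alternate 3, 1, 3, 1, ... starting from the rightmost data digit.
    for i, ch in enumerate(reversed(body)):
        total += int(ch) * (3 if i % 2 == 0 else 1)
    return (10 - total % 10) % 10


def normalize_gtin(raw: str) -> Optional[str]:
    """Return a GTIN-14 key for a valid GTIN-8/12/13/14 string, or None if invalid."""
    digits = "".join(raw.split()).replace("-", "")
    if not (digits.isascii() and digits.isdigit()) or len(digits) not in (8, 12, 13, 14):
        return None
    if gtin_check_digit(digits[:-1]) != int(digits[-1]):
        return None
    # Left-padding with zeros keeps the check digit valid and lets a UPC-A
    # and its 13-digit EAN form collapse onto the same key.
    return digits.zfill(14)


def ingest(listings: Iterable[dict], catalog: Dict[str, dict]) -> Tuple[int, int, int]:
    """Add listings keyed by GTIN-14; returns (added, duplicates, rejected)."""
    added = duplicates = rejected = 0
    for listing in listings:
        key = normalize_gtin(listing.get("barcode") or "")
        if key is None:
            rejected += 1      # bad or missing barcode: route to manual review
        elif key in catalog:
            duplicates += 1    # same product seen again: do not create a new SKU
        else:
            catalog[key] = listing
            added += 1
    return added, duplicates, rejected


catalog: Dict[str, dict] = {}
batch = [
    {"barcode": "036000291452", "title": "Sample item (UPC-A)"},
    {"barcode": "0036000291452", "title": "Same item, EAN-13 form"},
    {"barcode": "8901234567891", "title": "Typo in check digit"},
]
print(ingest(batch, catalog))  # (1, 1, 1)
```

Rejecting a code with a bad check digit at this stage is what stops a mistyped barcode from silently becoming a new, duplicate SKU.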

| Data Accuracy Metric | Traditional Methods | Visual + Code Method |
|---|---|---|
| Duplicate Product Records | High | Very Low |
| SKU Matching Precision | Moderate | Extremely High |
| Time to Validate New Listings | Multiple Days | Few Hours |

Additionally, UPC Code Data Scraping for Indian Groceries ensures that every visual match is anchored to a verified identifier, minimizing false positives and improving downstream analytics reliability. This structure supports faster onboarding, accurate price benchmarking, and consistent reporting across regions.

Designing Scalable Intelligence Layers for FMCG Analytics

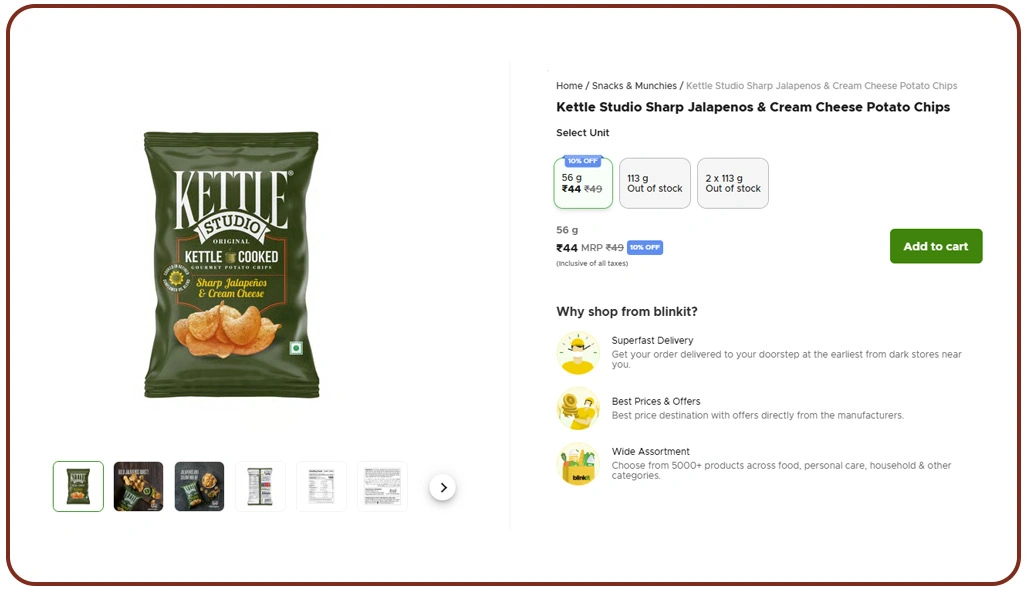

Once product identities are resolved, the next challenge lies in transforming raw inputs into enterprise-grade intelligence. Grocery data is inherently multi-dimensional, involving prices, availability, consumer feedback, and category hierarchies. Without proper structuring, even accurate data remains underutilized and difficult to query.

Modern intelligence systems rely on normalization layers that align pricing formats, standardize units of measurement, and maintain historical versions of product records. This allows analysts to track lifecycle changes, promotional effects, and regional assortment shifts over time. Scalable ingestion architectures are essential to handle high SKU volumes without compromising data quality.
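
A minimal sketch of such a normalization step, assuming pack sizes arrive as free-text strings such as "500 g" or "1.5 L" and prices as rupee strings. It converts both into a comparable price per kg, per litre or per piece. The unit table, regular expressions and function names are hypothetical; a real catalog would also need to handle multipacks (e.g. "6 x 200 ml") and weight ranges, which this sketch rejects with a `ValueError`.

```python
import re
from decimal import Decimal
from typing import Tuple

# Hypothetical unit table: every pack size is reduced to grams, millilitres or pieces.
UNIT_FACTORS = {
    "g": ("g", Decimal(1)), "gm": ("g", Decimal(1)), "kg": ("g", Decimal(1000)),
    "ml": ("ml", Decimal(1)), "l": ("ml", Decimal(1000)), "ltr": ("ml", Decimal(1000)),
    "pc": ("pc", Decimal(1)), "pcs": ("pc", Decimal(1)),
}
SIZE_RE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*([A-Za-z]+)\s*$")


def parse_pack_size(text: str) -> Tuple[Decimal, str]:
    """'1.5 L' -> 1500 ml; raises ValueError for unrecognised formats."""
    match = SIZE_RE.match(text)
    if not match or match.group(2).lower() not in UNIT_FACTORS:
        raise ValueError(f"unrecognised pack size: {text!r}")
    base_unit, factor = UNIT_FACTORS[match.group(2).lower()]
    return Decimal(match.group(1)) * factor, base_unit


def parse_price_inr(text: str) -> Decimal:
    """Strip rupee markers and thousands separators: 'Rs. 1,299.00' -> 1299.00."""
    cleaned = re.sub(r"(?i)₹|rs\.?|inr", "", text).replace(",", "").strip()
    return Decimal(cleaned)


def unit_price(price_text: str, size_text: str) -> Tuple[Decimal, str]:
    """Price per kg, per litre or per piece, rounded to two decimal places."""
    price = parse_price_inr(price_text)
    qty, base_unit = parse_pack_size(size_text)
    label, scale = {"g": ("kg", 1000), "ml": ("L", 1000), "pc": ("pc", 1)}[base_unit]
    return (price / qty * scale).quantize(Decimal("0.01")), label


print(unit_price("₹1,299.00", "5 kg"))  # (Decimal('259.80'), 'kg')
print(unit_price("Rs. 45", "500ml"))    # (Decimal('90.00'), 'L')
```

`Decimal` is used instead of `float` so that money values round predictably when they are stored and compared.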

The adoption of Enterprise Web Crawling infrastructure enables organizations to manage large-scale data extraction with stability, compliance handling, and automated retries. These systems outperform basic scrapers by ensuring uninterrupted data flows and structured outputs suitable for BI tools and dashboards.
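
Automated retries are commonly implemented as exponential backoff with jitter. The sketch below is a generic pattern rather than any specific crawling product's API: `with_retries`, its parameters and the set of exceptions treated as retryable are assumptions, and `fetch` stands in for whatever request the crawler actually makes. The injectable `sleep` argument lets the logic be tested without real waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(fetch: Callable[[], T],
                 max_attempts: int = 4,
                 base_delay: float = 1.0,
                 retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fetch(); on a retryable error, wait with exponential backoff and try again."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except retry_on:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # "Full jitter": wait a random time between 0 and base_delay * 2^(attempt-1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
    raise AssertionError("unreachable")


calls = {"n": 0}


def flaky_fetch() -> str:
    # Stand-in for a request that fails twice before succeeding.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network error")
    return "page content"


print(with_retries(flaky_fetch, base_delay=0.01))  # page content
```

Randomizing the wait spreads retries from many crawler workers over time, so a recovering site is not hit by a synchronized burst.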

| Intelligence Component | Operational Benefit |
|---|---|
| Normalized Price Records | Faster Competitive Analysis |
| Review-to-SKU Mapping | Sentiment-Driven Decisions |
| Versioned Product History | Lifecycle Trend Analysis |
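
Versioned product history can be sketched as an append-only log: a new version is stored only when a product's tracked attributes change, and any past date is answered by looking up the version in force on that day. This is a minimal sketch assuming observations for each GTIN arrive in date order; the class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ProductVersion:
    gtin: str
    attributes: Tuple[Tuple[str, str], ...]  # sorted (name, value) pairs
    valid_from: date


@dataclass
class ProductHistory:
    versions: Dict[str, List[ProductVersion]] = field(default_factory=dict)

    def record(self, gtin: str, attrs: Dict[str, str], seen_on: date) -> bool:
        """Append a version only if attributes differ from the latest one; True if stored."""
        snapshot = tuple(sorted(attrs.items()))
        history = self.versions.setdefault(gtin, [])
        if history and history[-1].attributes == snapshot:
            return False  # unchanged: no new version, keeps history compact
        history.append(ProductVersion(gtin, snapshot, seen_on))
        return True

    def as_of(self, gtin: str, day: date) -> Optional[Dict[str, str]]:
        """Attributes in force on `day`, or None if the product was not yet known."""
        current = None
        for version in self.versions.get(gtin, []):
            if version.valid_from > day:
                break
            current = dict(version.attributes)
        return current


history = ProductHistory()
gtin = "08901234567890"
history.record(gtin, {"pack_size": "500 g", "mrp": "45"}, date(2025, 1, 1))
history.record(gtin, {"pack_size": "500 g", "mrp": "45"}, date(2025, 1, 8))  # no change
history.record(gtin, {"pack_size": "450 g", "mrp": "45"}, date(2025, 2, 1))  # pack resized
print(history.as_of(gtin, date(2025, 1, 20)))  # {'mrp': '45', 'pack_size': '500 g'}
```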

Another critical component is Retail Grocery Review & Rating Data Extraction, which links consumer sentiment directly to verified SKUs. When reviews are mapped to normalized product records, enterprises gain deeper insight into how pricing, packaging, or formulation changes impact customer perception. This connection transforms static product data into actionable intelligence.
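
The key step is a mapping from each platform's listing ID to a verified GTIN, so that reviews of the same pack sold on different platforms are pooled. The sketch below assumes that mapping already exists (for example, built when listings are matched to barcodes) and that each review carries a numeric `rating`; the field names and listing IDs are hypothetical.

```python
from collections import defaultdict
from statistics import fmean
from typing import Dict, List, Tuple


def ratings_by_sku(reviews: List[dict],
                   listing_to_gtin: Dict[str, str]) -> Tuple[Dict[str, dict], List[dict]]:
    """Pool review ratings under verified GTINs; reviews of unmapped listings are returned separately."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    unmapped: List[dict] = []
    for review in reviews:
        gtin = listing_to_gtin.get(review["listing_id"])
        if gtin is None:
            unmapped.append(review)  # cannot be tied to a verified SKU yet
        else:
            grouped[gtin].append(review["rating"])
    summary = {
        gtin: {"reviews": len(ratings), "avg_rating": round(fmean(ratings), 2)}
        for gtin, ratings in grouped.items()
    }
    return summary, unmapped


# Hypothetical listing IDs on two platforms that sell the same pack.
mapping = {"platformA:123": "08901234567890", "platformB:xyz": "08901234567890"}
reviews = [
    {"listing_id": "platformA:123", "rating": 4},
    {"listing_id": "platformA:123", "rating": 5},
    {"listing_id": "platformB:xyz", "rating": 3},
    {"listing_id": "platformB:unknown", "rating": 1},
]
summary, unmapped = ratings_by_sku(reviews, mapping)
print(summary)  # {'08901234567890': {'reviews': 3, 'avg_rating': 4.0}}
```

Keeping unmapped reviews aside, rather than guessing a match, prevents sentiment from being attributed to the wrong SKU.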

Maintaining Long-Term Accuracy in Dynamic Markets

Creating a high-quality grocery database is only the first step; sustaining its accuracy over time is a far greater challenge. Indian retail markets evolve rapidly, with frequent SKU additions, packaging updates, and location-specific assortments. Static datasets quickly become outdated, leading to incorrect insights and flawed decisions.

Automated monitoring systems address this challenge by continuously validating images, identifiers, and pricing data. A robust Web Crawler framework detects visual changes, identifies mismatched identifiers, and flags anomalies for review. This proactive monitoring significantly reduces data decay and ensures that product records remain current.
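
A minimal sketch of the comparison such a monitor might run between two crawls of the same listing. It assumes each crawl stores the barcode, a 64-bit perceptual hash of the pack image and the price; the `Snapshot` type, the 10-bit hash threshold and the 30% price-jump threshold are illustrative assumptions to be tuned, and flagged records go to review rather than being changed automatically.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Snapshot:
    barcode: str
    image_hash: int  # 64-bit perceptual hash of the main pack image
    price: float


def detect_changes(old: Snapshot, new: Snapshot,
                   max_hash_distance: int = 10,
                   max_price_jump: float = 0.30) -> List[str]:
    """Compare two crawls of the same listing and return flags for human review."""
    flags = []
    if old.barcode != new.barcode:
        flags.append("identifier_mismatch")
    if bin(old.image_hash ^ new.image_hash).count("1") > max_hash_distance:
        flags.append("packaging_change")
    if old.price > 0 and abs(new.price - old.price) / old.price > max_price_jump:
        flags.append("price_anomaly")
    return flags


before = Snapshot("8901234567890", 0x00000000FFFFFFFF, 100.0)
after = Snapshot("8901234567890", 0xFFFFFFFF00000000, 150.0)
print(detect_changes(before, after))  # ['packaging_change', 'price_anomaly']
```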

Automation also enables timely detection of discontinued items and newly launched variants. Enterprises using continuous validation report up to a 40% reduction in catalog inconsistencies within the first six months. This accuracy directly improves demand forecasting, promotion planning, and inventory alignment across regions.
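
A minimal sketch of detecting launches and delistings by comparing successive crawls. To avoid mistaking a short stock-out for a discontinuation, it assumes an item should be absent for several consecutive crawls before it is flagged; `classify_assortment` and the `grace=3` window are hypothetical choices, and the letters in the example stand in for GTINs.

```python
from typing import Dict, List, Set


def classify_assortment(crawls: List[Set[str]], grace: int = 3) -> Dict[str, Set[str]]:
    """Classify GTINs across crawls ordered oldest to newest.

    new                  -> present in the latest crawl, never seen before
    temporarily_missing  -> absent now but seen within the last `grace` crawls
    likely_discontinued  -> seen at some point, absent from the last `grace` crawls
    """
    if len(crawls) < 2 or grace < 1:
        raise ValueError("need at least two crawls and grace >= 1")
    latest = crawls[-1]
    earlier = set().union(*crawls[:-1])
    recent = set().union(*crawls[-grace:])
    everything = earlier | latest
    return {
        "new": latest - earlier,
        "temporarily_missing": recent - latest,
        "likely_discontinued": everything - recent,
    }


crawls = [{"A", "B", "C"}, {"A", "B", "C"}, {"A", "C"}, {"A", "C"}, {"A", "D"}]
print(classify_assortment(crawls, grace=3))
# {'new': {'D'}, 'temporarily_missing': {'C'}, 'likely_discontinued': {'B'}}
```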

| Validation Area | Automation Outcome |
|---|---|
| Packaging Change Detection | Early SKU Updates |
| Identifier Consistency Checks | Reduced Data Errors |
| Regional Availability Monitoring | Improved Forecast Reliability |

Integrating Scraping Indian FMCG Items With UPC and Price Details further strengthens this process by ensuring that price movements and availability changes are always tied to verified product records. The result is a living database that evolves alongside the market and supports confident, data-driven decisions.

How Can Web Data Crawler Help You?

Building a scalable grocery intelligence system requires precision, automation, and domain expertise. Our solutions are designed to support enterprises aiming to Extract an Indian Grocery Database via Pictures and UPC Codes while maintaining long-term accuracy and compliance.

Our approach includes:

- Advanced visual recognition aligned with barcode validation.

- Automated data normalization across categories and pack sizes.

- Scalable ingestion pipelines for high SKU volumes.

- Quality assurance frameworks with anomaly detection.

- Secure data delivery in analytics-ready formats.

- Ongoing monitoring to prevent data decay.

By integrating consumer perception insights alongside verified product records, our systems also support Retail Grocery Review & Rating Data Extraction, enabling richer, more actionable grocery intelligence for enterprise teams.

Conclusion

Accurate grocery intelligence begins with a foundation that blends visual data and standardized identifiers. When implemented at scale, it becomes possible to Extract an Indian Grocery Database via Pictures and UPC Codes that supports pricing, assortment, and market research decisions with confidence and clarity.

Enterprises seeking long-term value should invest in solutions that evolve with the market. By adopting systems such as an Indian Grocery Item Scraper With UPC Codes, organizations can future-proof their data strategy. Connect with Web Data Crawler today to build a reliable grocery database that fuels smarter retail decisions and measurable growth.